Artificial Intelligence: A Danger to Humanity or a New Development?

- Fact Checked by: PokerListings

- Last updated on: February 2, 2024 · 13 minutes to read

What Effects Does Artificial Intelligence Have on Poker Players?

An open letter calling for a pause in the development of the most powerful artificial intelligence (AI) systems was signed in 2023 by almost 2,500 well-known figures from a variety of industries, including Elon Musk, Steve Wozniak, and Christoph Koch.

Because AI development is affecting poker as well — most modern solvers and other player tools use it to compute spots — I decided to investigate the potential risks and benefits for several aspects of human existence.

A Direct Message to AI Labs

The original text of the letter, titled “Pause Giant AI Experiments: An Open Letter,” is available on the website of the Future of Life Institute, a non-profit organization.

The letter is rather lengthy. In summary, the text:

- Identifies the primary concerns associated with the continuing race to create AI systems;

- Proposes examining the use of such systems in terms of potential harm to society;

- Highlights the key issues of regulating, managing, and distributing AI;

- Describes in broad strokes how to overcome these difficulties.

Before reading the material, make sure you understand the following concepts:

The Asilomar AI Principles are 23 principles for working with artificial intelligence that have been created and approved by top industry experts to mitigate risks.

GPT-4, which the letter refers to, is a version of the neural network behind OpenAI’s ChatGPT and employs cutting-edge AI technology. OpenAI is a company that develops machine learning systems and neural networks.

AI systems with intelligence comparable to that of humans could pose a major threat to society and humanity, as a wealth of research demonstrates and as top AI labs acknowledge. The Asilomar AI Principles state that advanced AI could represent a profound change in the history of life on Earth and should therefore be planned for and managed with commensurate care and resources. Unfortunately, this kind of planning and management does not currently exist, even though AI labs have in recent months been locked in an unchecked race to create and deploy ever-more-powerful digital minds that no one, not even their creators, can fully understand, predict, or control.

Modern AI systems are becoming able to compete with humans at general tasks, and we must ask ourselves the following:

- Should we let machines broadcast propaganda and lies on information channels?

- Should all labor be automated, even the jobs that make people happy?

- Should we create non-human minds that might eventually outnumber, outsmart, and replace us?

- Should we risk losing control of our civilization?

Such decisions must not be left to unelected leaders of the IT industry. We should create powerful AI systems only when we are confident that their benefits will outweigh the drawbacks and that the risks are manageable. This confidence has to be well-founded and grow in proportion to the possible impact of the system. In a recent statement on artificial general intelligence, OpenAI said that at some point it may be important to obtain an independent review before starting to train new systems, and for the most advanced efforts to agree to limit the growth rate of the compute used to create new models. We agree. That point is now.

We call on all AI labs to immediately halt, for at least six months, the training of AI systems more powerful than GPT-4. This pause should be public and verifiable, and it should include all key players. If it cannot be implemented quickly, governments should step in and impose a moratorium.

AI labs and independent experts should use the pause to jointly develop shared safety protocols for advanced AI design and development, which would then be examined and overseen by independent outside experts. These protocols should ensure that systems adhering to them are safe beyond a reasonable doubt. This does not mean a halt to AI development as a whole, only a step back from the risky race toward ever-more-unpredictable “black box” AI models with ever-greater capabilities.

The primary goal of AI research and development should now be to make today’s most advanced systems safer and more interpretable, accurate, transparent, dependable, loyal, and trustworthy.

At the same time, AI developers must work with policymakers to greatly accelerate the creation of robust AI governance systems. At the very least, these should include:

- New, capable regulatory authorities dedicated to AI;

- Oversight and tracking of highly capable AI systems and large pools of computing power;

- Provenance and watermarking systems to help distinguish real content from synthetic content and to track model leaks;

- A robust ecosystem for audits and certification;

- Liability for harm caused by AI;

- Stable public funding for technical AI safety research;

- Well-resourced institutions ready to handle the profound political and economic upheavals, particularly to democracy, that AI will bring about.

Humanity can enjoy a flourishing future with AI. Having succeeded in creating powerful AI systems, we can now enjoy an “AI summer” in which we reap the rewards, engineer these systems for the benefit of society, and give society time to adapt. Society has already put other technologies with potentially disastrous effects on hold. We can do the same here. Let’s enjoy a long AI summer rather than rush unprepared into the fall.

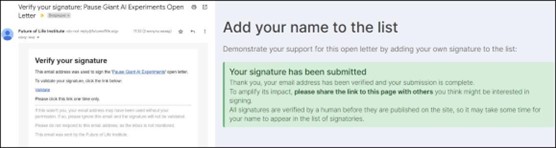

Anyone can sign the letter calling for the suspension of AI development and the introduction of regulations. To do so, enter your personal details in English at the end of the letter and confirm your signature by email.

A Selection of Well-Known People’s Viewpoints

The letter infuriated some observers, like Bill Ackman, CEO of Pershing Square, who thought that a halt to AI research would allow competitors, the “bad guys,” to close the gap between their homemade AIs and the “official” systems.

Even though Google CEO Sundar Pichai agreed with the letter’s authors, he stated that he did not believe AI development could be stopped at this time:

“Is artificial intelligence a threat to humanity? Quite. It has a wide variety of capabilities, thus the threat is also included. The letter is a fantastic first step toward starting a discussion about this issue, but I don’t see how firms will be able to adopt the remedies offered there on their own. Look, if I write a letter to my engineers instructing them to stop developing AI, does that imply that other companies will do the same? Certainly not. As a result, such choices must be taken and implemented by the authorities. And, most likely, it will take a long period, during which new hazards may develop and must be addressed in some way.”

Liv Boeree explored the risks of AI with Daniel Schmachtenberger, founder of The Consilience Project, who provided his perspective on the subject as a non-developer working in the field of science:

“Artificial intelligence has the ability to optimize practically anything, which is a significant problem in my opinion. For example, it can do good by optimizing a territory’s supply lines, but it can also do bad by optimizing terrorist assaults on them. To the AI itself, there is almost no difference between these activities. Furthermore, only a small number of people have the resources and money to devote to the massive investment needed to build AI. However, as soon as a functioning system model is made public and integrated into the network, anyone with the right motivations can use it for very little money.

There can be no doubt about security under any circumstances.”

Daniel Negreanu identified another looming issue related to AI’s widespread availability and lack of control:

“When it comes to defending oneself, how soon will it be that politicians or celebrities who are caught on camera or audio acting inappropriately would say that the footage is a fake created by artificial intelligence? This appears to be a good enough justification for claiming innocence.”

What Dangers Does Humanity Face as Artificial Intelligence Develops?

After compiling the data from the letter, professional comments, and Twitter users, I can identify five main hazards posed by the advancement of artificial intelligence systems:

- Taking away jobs that AI can do. Unlike industrialization, which introduced new ways of working to humanity, artificial intelligence does not introduce anything new; instead, it solves problems in the best way possible. Its continued progress may result in the automation of all manufacturing processes, increasing unemployment. At the same time, there is a considerable likelihood that AI will advance faster than society can generate new vocations and job categories. This could result in sectors unaffected by AI being flooded with people who want to work but cannot find enough openings, allowing corporations to cut pay substantially by capitalizing on the lack of alternative ways to earn a living. The population as a whole will become poorer, while a small stratum will become significantly richer, resulting in a major increase in social inequality.

- Changing motivation in decision-making from moral-empathic to rational-mathematical. Applying artificial intelligence to societal problems and to moral and ethical dilemmas has the potential to dehumanize people. A human-programmed machine reasons superbly in terms of rationality and optimality, but it lacks feelings and senses, so it does not experience guilt or moral pain; it makes decisions without regard for fairness or honesty.

- Spreading misinformation. AI is trained on publicly available human data, and people produce a lot of false information, including propaganda, esoterica, pseudoscience, and other fakes, so AI can reproduce and amplify it. Fake pictures of the Pope and of the detention of Trump or Biden were among the most notable examples from the start of 2023; after they went viral, Midjourney, an image generation service, was even compelled to stop offering its service for free.

- Reduction in value of music, literature, and art, as well as the eradication of copyright. Certain neural networks are already capable of producing text, images, and musical compositions. They do not, of course, contain an author’s idea, but as AI advances, it is possible that systems could produce works of such high caliber that human labor to create them will become obsolete.

- An explosion of fraud. This is already happening; for example, in 2022, thieves defrauded consumers in the United States of more than $11 million by using AI to impersonate voices. At the present rate of technological advancement, the risk of being unable to recognize and punish AI scammers will only increase, and AI systems will become better at defeating the safeguards against them.

What Are the Benefits of Artificial Intelligence to Humanity?

- Artificial intelligence’s primary benefit is that it saves people time. Compared to a human, it can process data more quickly and in greater quantities, and even at this early stage, the results are good enough that people do not have to spend time meticulously checking them. This applies first of all to solving problems where the best solution must be found, to producing texts, images, and music according to technical specifications when no creative expression is required of the author, and to checking texts for logical or factual errors.

- The second benefit of AI is the ability to make optimally rational decisions. A neural network cannot be coerced, threatened, or otherwise made to do anything against its algorithms. Since it is emotionally indifferent and cannot be moved to pity or talked into anything, it reaches conclusions that are purely rational and take all relevant information into account. This is terrible for moral and ethical questions, but it’s a great way to solve technical problems like logistics or infrastructure.

- Another benefit that some developers point out is the absence of cognitive biases. Because AI lacks a brain with all of its physiological and mental peculiarities, it does not think in the human sense, so its conclusions are free of such distortions. The risk of a coding error somewhat offsets this benefit. Since AI is programmed by humans, it may contain errors that the machine itself is unaware of, and these technical errors can carry over into its conclusions.

- Finally, AI can forecast events based on previous experience and current conditions. As a result, society can obtain recommendations on how to better prepare for and prevent certain disasters.

Is There a Risk to Poker as AI Develops?

Phil Galfond, a poker player and Run It Once founder who has been working with AI poker software for many years, uploaded a video titled “Is AI Killing Poker?” to his channel on March 30, 2023. Phil makes three important points in this video:

Artificial intelligence solvers pose no threat to poker. Although they help to better your poker game, they only provide you with an awareness of the optimal lines and cannot teach you how to play poker brilliantly against all of your opponents’ unique traits.

It is practically impossible for a person to memorize every solver solution, since there are just too many possible hands, flops, turns, rivers, boards, and opponent moves. Additionally, different disciplines and game formats have different combinations of these moves. Even if you were allowed to use a solver directly during the game, something that online poker rooms have long prohibited, you would not have enough time to enter all of the hand’s details and run the computations, particularly in multiway pots.

Prompters (RTAs) pose a greater risk to poker than bots do. Some people may find this counterintuitive, but it is a reality. An RTA does not play by itself; rather, a person uses it to make decisions. A bot, by contrast, is something rooms can catch, since it plays by itself and acts in a fairly uniform way at the table. Bots are frequently launched into rooms in groups, which makes them easier to detect. For example, a group of users who have nearly identical stats, play on a set schedule, use shared verification details, and so on, is easy pickings for the security service.

Rooms’ use of AI to identify AI cheaters lowers the risk. Poker rooms are run by intelligent individuals. First, they realize that the presence of “perfect” players harms the company’s reputation and deters amateurs from joining. Second, they stay up to date on the latest poker advancements and are aware of the challenges they face. There is a constant “AI arms race” between cheaters and security departments, but it doesn’t affect most players.

In his video, Phil also revealed a surprising truth. He claims that between 70 and 90 percent of the players in a room break even or lose; the exact percentage varies with the room’s popularity and degree of regulation. Since it would be inefficient for the security team to spend its resources monitoring this majority, it keeps an eye on the remaining 10 to 30 percent of the field, paying particular attention to players who regularly win and whose long-run stats look suspicious.
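To make the idea more concrete, here is a rough sketch of how such a check could look. It is not how any real poker room works: the account names, the stats (VPIP, PFR, aggression factor, win rate), and the tolerance are all made up for illustration. The sketch first drops losing players, then flags winners whose playing stats are nearly identical to another winner's, which is the "group of accounts with the same numbers" pattern described above.

```python
from itertools import combinations

# Hypothetical per-account stats, invented for this example:
# (VPIP %, PFR %, aggression factor, win rate in bb/100).
accounts = {
    "acct_a": (23.1, 19.0, 2.9, 6.2),
    "acct_b": (23.0, 19.1, 2.9, 5.8),
    "acct_c": (23.2, 18.9, 3.0, 6.0),
    "acct_d": (41.5, 8.2, 1.1, -12.4),
    "acct_e": (27.8, 22.4, 3.6, 3.1),
}


def is_winner(stats):
    """A player is worth monitoring only if the win rate is positive."""
    return stats[3] > 0


def near_identical(a, b, tolerance=0.5):
    """Compare the three style stats only; the win rate is ignored here."""
    return all(abs(x - y) <= tolerance for x, y in zip(a[:3], b[:3]))


def suspicious_accounts(accounts, tolerance=0.5):
    """Return winning accounts whose style stats nearly match another winner's."""
    winners = {name: s for name, s in accounts.items() if is_winner(s)}
    flagged = set()
    for (n1, s1), (n2, s2) in combinations(winners.items(), 2):
        if near_identical(s1, s2, tolerance):
            flagged.update((n1, n2))
    return sorted(flagged)


print(suspicious_accounts(accounts))
```

In this toy data, the losing account is never examined, and the one winner with a distinct style is left alone. A real security team would presumably combine many more signals, such as playing schedules and verification details.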

So, Is AI Actually a Threat?

It appears that at this point artificial intelligence systems cause far more harm than good, given that they are unregulated and capable of producing deepfakes, misleading people, and finding optimal solutions for socially dangerous actions.

Elon Musk and other techno-sphere enthusiasts have raised alarms about the rapid development of neural networks because they believe that if control over the development, use, and general availability of AI is not reinforced, it genuinely has a high probability of becoming the greatest threat to mankind.

If developers and governments manage to regulate and advance AI technology to the point where it is safe and harmless for humans, yet still useful and functional, we are likely to enter an era in which work and life are organized very differently from today, with better use of time and resources.

That’s all I wanted to share with you today. What is your opinion on AI?

Do you utilize it for playing online poker?
